
For personal reasons, I've become deeply interested in improving prenatal testing accuracy. What started as an attempt to understand why certain tests failed led me down a rabbit hole of genomics, AI, and some frankly shocking statistics about current testing limitations.

After months of research, I'm now building an AI-enhanced prenatal testing system, backed by an Emergent Ventures grant. The technical challenges are substantial, the market opportunity is massive, and the potential impact on families makes this feel like the most important project I've tackled.

The Scale of the Problem

The numbers around current prenatal testing are more concerning than most people realise. The UK alone sees around 700,000 pregnancies annually, with most requiring some form of genetic screening. The combined test - the standard NHS screening - flags approximately 2-5% of low-risk pregnancies for invasive follow-up procedures as false positives.

A bigger problem is test failure: repeat sampling is needed in 3-8% of cases, depending on maternal factors like BMI and gestational age. Standard NIPT results typically take 7-14 days, so a failed test can mean additional weeks of uncertainty for families. When you scale this globally - the prenatal testing market is worth around £20 billion and growing at 8-10% annually - even small improvements in accuracy translate to helping tens of thousands of families avoid unnecessary anxiety and medical procedures.
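To make the scale concrete, here is a back-of-envelope calculation using only the UK figures quoted above. It assumes, purely for illustration, that every one of the 700,000 pregnancies is screened and that the quoted percentage ranges apply uniformly, so the results are rough upper bounds rather than real counts. The function name `affected_range` is my own.

```python
def affected_range(population, low_rate, high_rate):
    """Return (low, high) counts for a rate range applied to a population."""
    return round(population * low_rate), round(population * high_rate)

UK_PREGNANCIES = 700_000  # annual figure quoted in the text

# Assumption: every pregnancy is screened and the rates apply uniformly.
flagged = affected_range(UK_PREGNANCIES, 0.02, 0.05)  # false-positive flags
repeats = affected_range(UK_PREGNANCIES, 0.03, 0.08)  # failed tests needing a redraw

print(f"Flagged for follow-up: {flagged[0]:,} to {flagged[1]:,}")
print(f"Repeat sampling:       {repeats[0]:,} to {repeats[1]:,}")
```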

The technical root cause is straightforward: current methods analyse cell-free DNA fragments in maternal blood using relatively simple statistical approaches developed in the early 2000s. These struggle with low foetal DNA concentrations (typically 3-20% of total cell-free DNA) and contamination patterns that vary significantly between individuals.
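One widely published example of a simple statistical approach is the read-count z-score: compare the share of fragments mapping to a chromosome against a reference set of unaffected samples. The sketch below is my own illustration of that idea, not a description of any specific commercial test. The reference values, the 0.013 baseline, and the cutoff of 3 are made-up or conventional illustrative numbers.

```python
from statistics import mean, stdev

def chromosome_z_score(sample_fraction, reference_fractions):
    """Z-score of a sample's read fraction against unaffected reference samples."""
    mu = mean(reference_fractions)
    sigma = stdev(reference_fractions)
    return (sample_fraction - mu) / sigma

def expected_trisomy_fraction(euploid_fraction, foetal_fraction):
    """Approximate read fraction when the foetus carries three copies.

    Foetal DNA contributes roughly 1.5x the usual reads from that chromosome,
    so the overall fraction rises by about (1 + foetal_fraction / 2).
    """
    return euploid_fraction * (1 + foetal_fraction / 2)

# Made-up reference fractions for one chromosome across unaffected samples.
REFERENCE = [0.01290, 0.01310, 0.01300, 0.01305, 0.01295]
CUTOFF = 3.0  # illustrative threshold

for ff in (0.03, 0.10, 0.20):
    z = chromosome_z_score(expected_trisomy_fraction(0.013, ff), REFERENCE)
    status = "flagged" if z > CUTOFF else "missed"
    print(f"foetal fraction {ff:.0%}: z = {z:.1f} ({status})")
```

With these illustrative numbers, the same trisomy is easy to see at 10% foetal fraction but falls below the cutoff at 3%, which is the low-concentration problem described above.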

What struck me during research was how little modern AI has been applied to this problem. Most innovation has focused on expanding access rather than improving the fundamental accuracy of fragment analysis. The cost of noninvasive prenatal testing has decreased significantly, but accuracy improvements have lagged behind what's technically possible with today's AI capabilities.

Current Technical Approach

I've spent considerable time researching the technical landscape, and my current thinking centres around using transformer models for DNA fragment pattern recognition. This will almost certainly evolve as I dig deeper, but the initial approach involves several key components.

Synthetic Data Generation: This is probably the most critical piece. Real genomic data is both highly sensitive and limited in quantity for training robust models. I'm building a pipeline to generate realistic synthetic cell-free DNA samples that capture the complexity of real data whilst protecting privacy completely.

The synthetic generation needs to model several factors: maternal DNA contamination patterns, foetal DNA concentration variations, fragment length distributions, and the specific genomic regions that indicate chromosomal abnormalities. Based on published research, I'm starting with models that can generate fragments across chromosomes 13, 18, 21, and the sex chromosomes - the primary targets for current screening. I will then expand coverage to the top ~50 conditions.
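A toy version of such a generator might look like the sketch below. It is a deliberately minimal illustration, not the actual pipeline: the chromosome weights, the mean fragment lengths (shorter for foetal fragments), the standard deviation, and every name are assumptions chosen for readability, and sex chromosomes are left out.

```python
import random
from dataclasses import dataclass

# Illustrative relative weights only, not precise genome proportions.
CHROM_WEIGHTS = {"chr13": 3.7, "chr18": 2.6, "chr21": 1.5, "other": 92.2}

@dataclass
class Fragment:
    chrom: str
    length: int
    foetal: bool

def simulate_sample(n_fragments, foetal_fraction, trisomy=None, seed=None):
    """Generate synthetic fragments for one maternal blood sample."""
    rng = random.Random(seed)
    chroms = list(CHROM_WEIGHTS)
    maternal_w = [CHROM_WEIGHTS[c] for c in chroms]
    # A trisomic foetus contributes 1.5x the fragments from the affected chromosome.
    foetal_w = [CHROM_WEIGHTS[c] * (1.5 if c == trisomy else 1.0) for c in chroms]
    fragments = []
    for _ in range(n_fragments):
        is_foetal = rng.random() < foetal_fraction
        weights = foetal_w if is_foetal else maternal_w
        chrom = rng.choices(chroms, weights=weights, k=1)[0]
        mean_len = 143 if is_foetal else 166  # assumed means, in base pairs
        length = max(50, round(rng.gauss(mean_len, 15)))
        fragments.append(Fragment(chrom, length, is_foetal))
    return fragments

sample = simulate_sample(20_000, foetal_fraction=0.10, trisomy="chr21", seed=0)
foetal_share = sum(f.foetal for f in sample) / len(sample)
print(f"{len(sample)} fragments, foetal share {foetal_share:.3f}")
```

Because the origin of every fragment is known, samples like this come with ground-truth labels, something real data cannot offer.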

Fragment Analysis Architecture: My current plan uses transformer models adapted for sequence analysis, though this might change significantly as I experiment. The key insight is that current methods treat each fragment independently, whilst modern attention mechanisms can identify complex patterns across multiple fragments that indicate genetic conditions.

I'm starting with a multi-head attention architecture that processes variable-length sequences of DNA fragments, learning representations that capture both individual fragment characteristics and broader patterns across the sample. The model will need to handle significant noise - most fragments are maternal DNA that should be filtered out.
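The core mechanism can be sketched in plain Python. This is a simplified attention pooling step with one fixed query per head, not a full transformer layer, and in a real model the queries and embeddings would be learned. It assumes each sample has at least one real fragment, and all names and numbers are illustrative.

```python
import math

def masked_softmax(scores, mask):
    """Softmax over positions where mask is True; masked positions get weight 0."""
    peak = max(s for s, m in zip(scores, mask) if m)  # needs one valid position
    exps = [math.exp(s - peak) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(embeddings, mask, queries):
    """Pool per-fragment embeddings into one sample vector, one head per query.

    embeddings: fragment vectors, padded so every sample has the same count
    mask:       True for real fragments, False for padding
    queries:    one query vector per head, each scoring its slice of the embedding
    """
    head_dim = len(queries[0])
    pooled = []
    for h, query in enumerate(queries):
        lo, hi = h * head_dim, (h + 1) * head_dim
        slices = [vec[lo:hi] for vec in embeddings]
        scores = [
            sum(q * x for q, x in zip(query, s)) / math.sqrt(head_dim)
            for s in slices
        ]
        weights = masked_softmax(scores, mask)
        for d in range(head_dim):
            pooled.append(sum(w * s[d] for w, s in zip(weights, slices)))
    return pooled

# Two real fragments plus one padding slot, with two heads of width 2.
embeddings = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.2, 0.8], [0.0, 0.0, 0.0, 0.0]]
mask = [True, True, False]
queries = [[1.0, 0.0], [0.0, 1.0]]
sample_vector = attention_pool(embeddings, mask, queries)
print(sample_vector)
```

The mask is what lets samples with different fragment counts share one batch: padding slots receive zero weight, so they cannot influence the pooled representation.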

Quality Assessment Framework: One major gap in current testing is knowing when to recommend retesting rather than providing potentially inaccurate results. I'm building confidence estimation into the model architecture, so it can identify samples where uncertainty is too high for a reliable result.

This involves both model uncertainty (epistemic) and data uncertainty (aleatoric) - distinguishing between "the model isn't confident" and "this sample is genuinely ambiguous." The clinical workflow implications are significant, as better uncertainty quantification could substantially reduce both false positives and test failures. This is particularly important for understanding when NIPT results might be wrong for conditions like Down syndrome.
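One common way to separate the two kinds of uncertainty is an entropy decomposition over an ensemble of models. The sketch below assumes such an ensemble exists and that each member outputs a probability that the sample is affected. It illustrates the general technique rather than the architecture I'll end up with, and the probabilities are invented.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a yes/no prediction with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def decompose_uncertainty(member_probs):
    """Split predictive uncertainty into aleatoric and epistemic parts.

    total     = entropy of the averaged prediction
    aleatoric = average entropy of the individual members
    epistemic = total - aleatoric (how much the members disagree)
    """
    mean_p = sum(member_probs) / len(member_probs)
    total = binary_entropy(mean_p)
    aleatoric = sum(binary_entropy(p) for p in member_probs) / len(member_probs)
    return {"total": total, "aleatoric": aleatoric, "epistemic": total - aleatoric}

# Every member says "could go either way": the sample itself is ambiguous.
ambiguous = decompose_uncertainty([0.5, 0.5, 0.5, 0.5])
# Members are individually sure but contradict each other: the model is unsure.
disagreeing = decompose_uncertainty([0.02, 0.98, 0.03, 0.97])

print("ambiguous sample:", ambiguous)
print("model disagreement:", disagreeing)
```

Both cases average to a 50% prediction, but they call for different actions: an ambiguous sample may need a redraw, while disagreement between members points to a gap in the training data.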

Development Plan and Phases

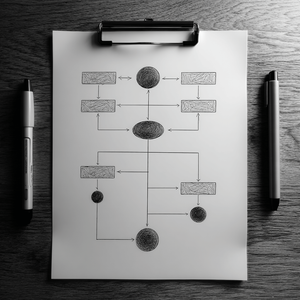

The path from current research to clinical implementation is necessarily long, but I've mapped out a structured approach across three main phases.

Phase 1: Synthetic Data and Model Development. Building the synthetic data generation pipeline and initial model architectures. This involves extensive validation against published datasets to ensure synthetic data captures real-world complexity. I'll be using Claude Code and Grok heavily here - whilst I have a strong AI background, genomics isn't my primary domain, so AI-assisted development will be crucial for rapid iteration.

The goal is to demonstrate that transformer models can outperform current statistical methods on historical datasets before moving to any real-world validation.

Phase 2: Real Data Validation. Working with clinical partners to validate the approach on retrospective datasets. This requires navigating significant regulatory and privacy constraints - real genomic data requires approval from research ethics committees and strict data handling protocols.

The validation needs to demonstrate not just improved accuracy, but also better handling of edge cases that cause current test failures. Success metrics include reduced false positive rates, lower test failure rates, and improved sensitivity for detecting actual chromosomal abnormalities.
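These success metrics are straightforward to compute once each sample has a prediction and a confirmed outcome. Here is a minimal sketch, assuming three possible predictions ("positive", "negative", "no_call" for a failed test) and measuring sensitivity and false positive rate only on samples that received a result. The counts in the example are invented for illustration.

```python
def screening_metrics(results):
    """Compute metrics from (prediction, affected) pairs.

    prediction is "positive", "negative", or "no_call"; affected is a bool.
    """
    tp = fp = tn = fn = no_call = 0
    for prediction, affected in results:
        if prediction == "no_call":
            no_call += 1
        elif prediction == "positive":
            if affected:
                tp += 1
            else:
                fp += 1
        elif affected:
            fn += 1
        else:
            tn += 1

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "false_positive_rate": ratio(fp, fp + tn),
        "failure_rate": ratio(no_call, len(results)),
    }

# Invented cohort: 1,000 unaffected and 10 affected pregnancies.
results = (
    [("negative", False)] * 960
    + [("positive", False)] * 20
    + [("no_call", False)] * 20
    + [("positive", True)] * 9
    + [("negative", True)] * 1
)
metrics = screening_metrics(results)
print(metrics)
```

Reporting the failure rate alongside the other two matters because a model could otherwise look more accurate simply by refusing to call the difficult samples.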

Phase 3: Clinical Trials. Prospective clinical validation comparing the AI-enhanced approach against current standard care. This is where regulatory approval processes begin in earnest, requiring extensive documentation of model training, validation procedures, and clinical safety protocols.

Market Dynamics and Opportunity

The prenatal testing landscape is dominated by a few major players - Illumina, Roche, PerkinElmer - but most innovation has been incremental. The core technology hasn't fundamentally changed in over a decade, despite massive advances in AI during the same period.

What's particularly interesting is the market segmentation. The NHS and other public health systems prioritise cost-effectiveness and broad access, whilst private markets focus on comprehensive testing panels. An AI approach that improves accuracy whilst maintaining cost-effectiveness could disrupt both segments. Insurance coverage for tests such as the Panorama prenatal test currently varies significantly, creating opportunities for more accessible, accurate alternatives.

The regulatory pathway varies significantly by region. The FDA has approved several AI-based diagnostic tools in recent years, suggesting increasing acceptance of ML approaches in clinical settings. The EU's MDR framework is more stringent but provides clearer guidance for AI-based medical devices.

From a business perspective, the recurring revenue model is compelling - each test generates revenue, and improved accuracy could justify premium pricing. The technical moat comes from the model training and clinical validation, which require significant time and capital investment.

Personal Motivation and Emergent Ventures

The personal drive here is substantial - I'm not going to stop until this is solved. Having experienced the limitations of current testing firsthand, there's clear motivation to build something genuinely better.

This work is supported by an Emergent Ventures grant, which has been invaluable for focusing on this challenge without immediate commercial pressure. Emergent Ventures, run by Tyler Cowen at George Mason University, backs unconventional projects that might struggle with traditional funding. I've received previous grants from the programme, and it consistently impresses me with the quality of the people and projects it supports.

For those considering applying, it's important to understand the difference between Emergent Ventures grants and fellowships - grants provide funding for specific projects, whilst fellowships support individuals over longer periods. The Emergent Ventures Unconference remains one of the most valuable experiences for connecting with other grant recipients and exploring ambitious ideas across disciplines.

The programme's approach - backing individuals rather than just ideas, encouraging bold thinking, and providing both funding and community - makes it perfect for this kind of ambitious technical challenge. The other grant recipients work on everything from AI safety to novel manufacturing techniques, creating an environment where tackling complex problems feels not just possible but expected.

The AI + Biotech Intersection

This project sits at the intersection of AI and biotech, which represents one of the most promising areas for impact in the coming years. We're seeing breakthroughs in drug discovery, protein folding, and diagnostic accuracy. Applying these techniques to prenatal testing feels like a natural evolution.

What excites me technically is how much room there is for improvement. Current methods use basic statistical approaches that were state-of-the-art twenty years ago. Modern techniques - attention mechanisms, transfer learning, advanced uncertainty quantification - simply weren't available when these protocols were developed.

The constraint isn't computational - genomic analysis doesn't require massive models. The real constraints are data quality, regulatory approval, and clinical validation. These are solvable problems, but they require patient, methodical work rather than rapid iteration.

Current Status and Next Steps

Development is just beginning, but the technical foundation is solid. I'm starting with synthetic data generation and model architecture experiments, validating against published datasets before any real-world testing.

The immediate focus is building the data pipeline and initial transformer models. Every major decision will be documented as I build it - both the successes and the inevitable dead ends. This aligns with my broader approach to building systems methodically and transparently. Given the regulatory requirements, maintaining detailed documentation from the beginning is essential.

I expect the technical approach to evolve significantly as I learn more about the domain. The transformer architecture might change, the synthetic data approach will definitely be refined, and the clinical validation strategy will adapt based on regulatory feedback.

If anyone reading this works in genomics research, has experience with clinical AI validation, or is interested in AI applications in healthcare, I'd love to hear from you. The intersection of these fields is moving rapidly, and there's always more to learn from people working on adjacent problems.

This feels like the kind of project where success could genuinely improve outcomes for families - which makes the substantial technical challenges ahead feel entirely worthwhile.