
I've been using AI coding tools heavily over the past year. Claude, Cursor, GitHub Copilot - they're all part of my daily workflow now. But I kept hitting the same frustrating pattern.

I'd start a new project, and the AI would make the wrong default choices. It would suggest SQLite when I always use Postgres. It would structure tests differently than my standard approach. It would ignore my Redis connection pooling preferences. Every single time, I'd have to stop and say "no, do it this way instead."

The back-and-forth was killing my flow.

At first, I thought this was just my problem. But then I started talking to other teams. The pattern was everywhere. Engineering managers were frustrated watching their teams use AI tools that had no idea about the company's architectural decisions. New developers were getting AI-generated code that violated team standards. Senior developers were spending hours in code reviews fixing the same AI-suggested mistakes over and over.

I tried the usual solutions. I created .cursorrules files, maintained CLAUDE.md documents, and set up detailed context files for each project. But here's the thing: this doesn't scale across a team. You've got 10 developers using 4 different AI tools. Standards evolve: you update your password hashing approach, and now someone needs to update every developer's local config files. Within three months, everyone's got their own interpretation of "team standards."

And even if you could keep everything in sync, you hit another problem: you can't just dump everything into a 1000-line config file. The AI needs specific context at the right time, not a wall of text up front.

That's when I had the insight: what if there was a single source of truth for team knowledge that could serve the right context to any AI tool, exactly when it needs it?

So I built Cont3xt.dev.

The Wrong Debate

Everyone's obsessing over which LLM is best for coding. Is it Claude? GPT-4? The latest release from OpenAI? Teams spend hours debating whether to standardise on one model or let developers choose their own. Engineering leads compare benchmarks trying to find the winner.

But they're asking the wrong question.

The problem isn't which model you're using. The problem is that none of them have your context. I've watched teams switch from one coding model to another, hoping the newer model would somehow know their architectural decisions. It doesn't matter if you're using the best model for code if it doesn't know you always use Postgres, or that you deprecated a particular authentication pattern three months ago.

A mid-tier model with proper context will outperform the most advanced model without it. Every single time.

The Real Cost of Context Failures

The numbers from teams I spoke to were eye-opening. Senior developers were spending 10+ hours per week answering the same architectural questions. "Why did we choose Postgres?" "How do we handle authentication?" "What's our Redis pooling strategy?" These are questions AI tools should be able to answer, but they can't because they don't have access to the team's knowledge.

New developers take weeks to learn team conventions. They make mistakes AI should prevent. They ask questions AI should answer. They write code that doesn't match team patterns. When I wrote about AI for coding, I focused on individual productivity. But the team angle is where the real pain lives.

PR reviews became a nightmare. On the teams I spoke to, about 38% of PRs were getting rejected for not following unwritten rules: rules the AI confidently violated because it had no idea they existed. Security policies, deprecated patterns, architectural decisions made months ago in a Slack thread: all invisible to the AI.

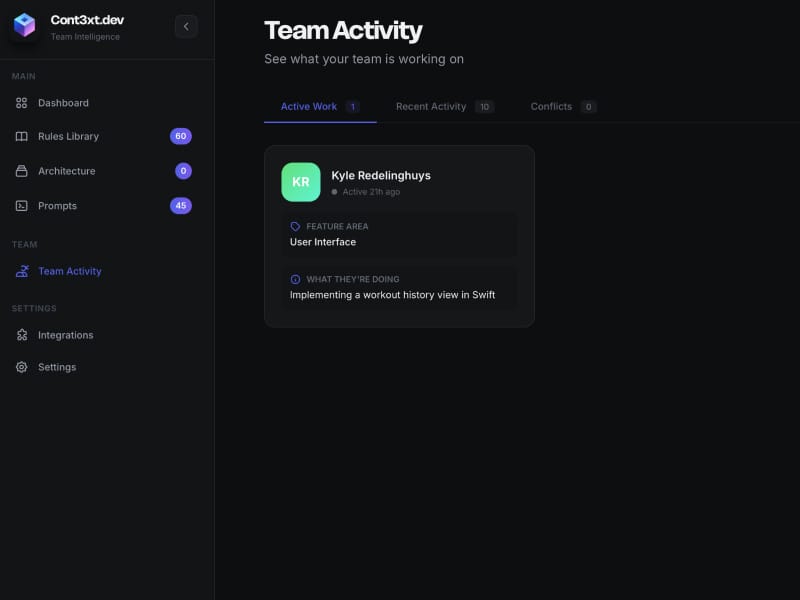

And here's something I didn't expect: developers had no visibility into what their teammates were working on. Multiple developers would unknowingly work on the same code. The AI would suggest changes that conflicted with work in progress. Merge conflicts tripled.

The manual workarounds proved there was demand. Teams were maintaining separate config files for Cursor, GitHub Copilot, Claude Code, and VS Code extensions. Some teams had built sophisticated "Memory Bank" systems with structured files. But coordinating updates across 50 developers using 6 different AI tools? That's maintenance hell.

What I Built

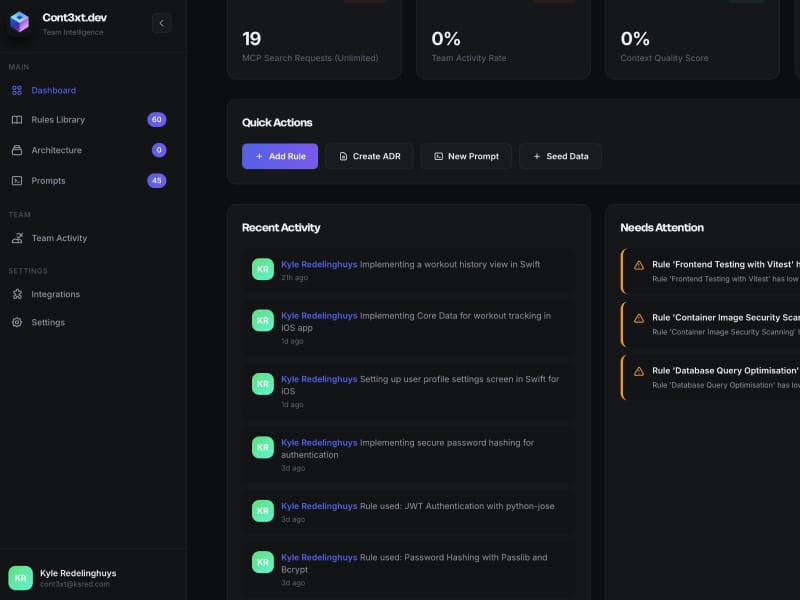

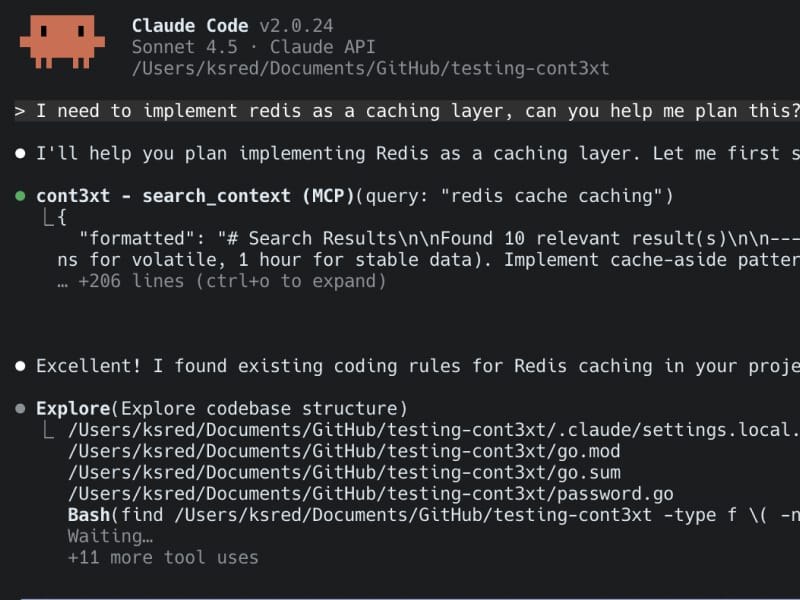

Cont3xt.dev is a universal context management platform: a single source of truth for team knowledge that automatically serves relevant context to all AI tools via the Model Context Protocol (MCP).

I previously built an MCP server for personal memory, which taught me how powerful the protocol could be. But this needed to work at team scale.

The platform captures three types of knowledge:

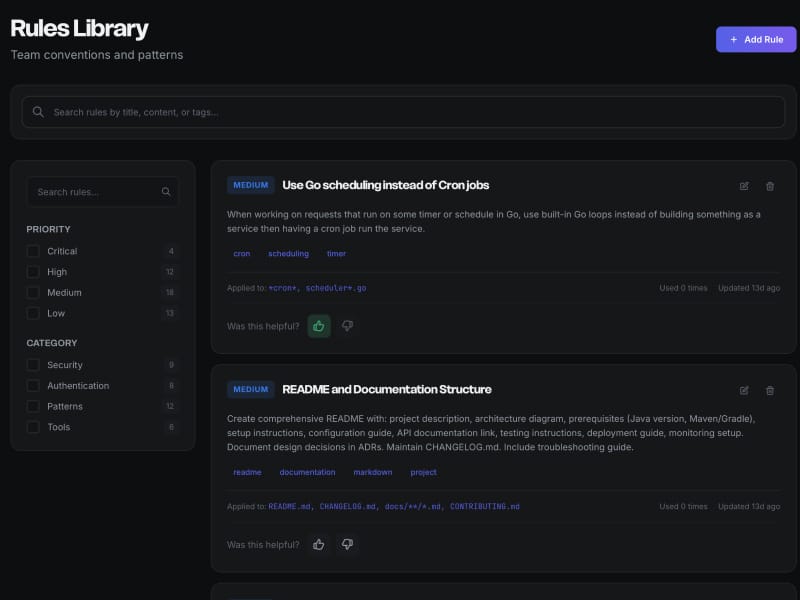

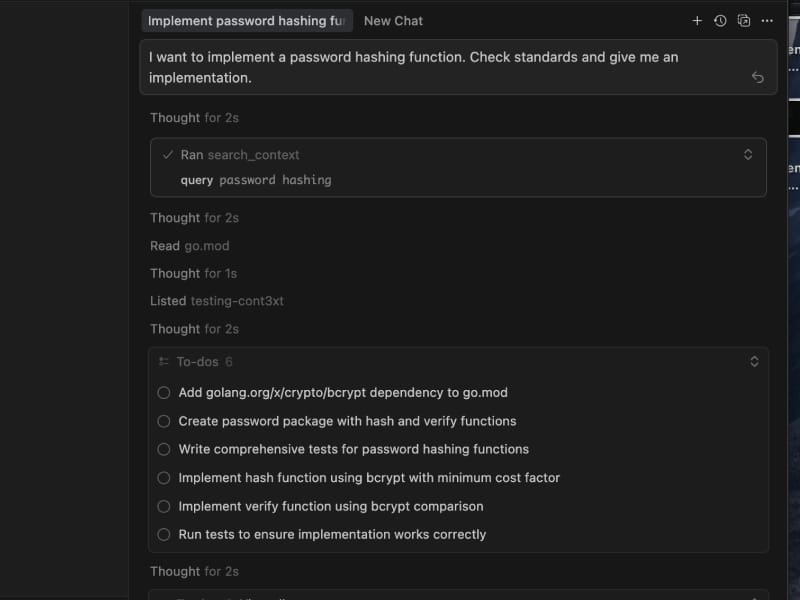

Context Rules are your coding standards, architectural patterns, security requirements, and team conventions. They're priority-weighted from 1 (Critical) to 5 (Nice-to-Know). You can attach file patterns, tags, and categories. For example: "Always use bcrypt for password hashing with cost factor 12+" with priority 1 for security-critical code.
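A context rule is essentially a record with a priority and some matching metadata. Here's a minimal Go sketch of that shape, plus a filter that picks the rules whose file patterns match the file being edited, most critical first. The field names, the "no patterns means applies everywhere" convention, and the use of `path.Match` glob syntax are all assumptions; the post doesn't say how Cont3xt.dev matches patterns.

```go
package main

import (
	"fmt"
	"path"
	"sort"
)

// Priority runs from 1 (Critical) to 5 (Nice-to-Know).
type Priority int

const (
	Critical   Priority = 1
	NiceToKnow Priority = 5
)

// Rule is a hypothetical shape for a context rule.
type Rule struct {
	Text         string
	Priority     Priority
	FilePatterns []string // glob patterns; empty means the rule applies everywhere
	Tags         []string
	Category     string
}

// RulesFor returns the rules that apply to filePath, most critical first.
func RulesFor(rules []Rule, filePath string) []Rule {
	var out []Rule
	for _, r := range rules {
		if appliesTo(r, filePath) {
			out = append(out, r)
		}
	}
	sort.SliceStable(out, func(i, j int) bool { return out[i].Priority < out[j].Priority })
	return out
}

func appliesTo(r Rule, filePath string) bool {
	if len(r.FilePatterns) == 0 {
		return true
	}
	base := path.Base(filePath)
	for _, p := range r.FilePatterns {
		// Try the full path ("internal/auth/*") and the base name ("*_test.go"),
		// because "*" in path.Match never crosses a "/".
		if ok, _ := path.Match(p, filePath); ok {
			return true
		}
		if ok, _ := path.Match(p, base); ok {
			return true
		}
	}
	return false
}

func main() {
	rules := []Rule{
		{Text: "Prefer table-driven tests", Priority: 3, FilePatterns: []string{"*_test.go"}},
		{Text: "Always use bcrypt for password hashing with cost factor 12+", Priority: Critical,
			FilePatterns: []string{"internal/auth/*"}, Category: "security"},
		{Text: "Use Postgres, not SQLite", Priority: 2},
	}
	for _, r := range RulesFor(rules, "internal/auth/hash.go") {
		fmt.Println(r.Priority, r.Text)
	}
}
```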

Architectural Decision Records (ADRs) document why you made specific technical choices. The context, the decision, the consequences, and the current status. These capture the reasoning that prevents teams from revisiting settled decisions. When someone asks "Why Postgres instead of MongoDB?" the AI can point to ADR-001 with the full reasoning.

Prompt Library stores tested, team-approved prompts for common tasks, such as "Generate Go table-driven tests" or "Refactor this function for testability." These ensure consistent output quality across the team.

Instead of maintaining tool-specific config files, teams define their context once in Cont3xt.dev, and every AI assistant receives the right context at the right time.

This works with any model. Whether your team prefers Claude, uses GitHub Copilot, or switches between several models, they all get the same context. The debate about which model is best for code matters much less once every model has access to your team's knowledge.

The Technical Breakthrough

The core innovation isn't just storing context - it's serving the right context at the right time without overwhelming AI tools' token budgets.
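One simple way to respect a token budget is to sort candidate snippets by priority (and by relevance within a priority) and add them greedily until the budget runs out. This is a sketch of that general idea, not Cont3xt.dev's actual algorithm; the `Snippet` type is hypothetical, and the four-characters-per-token estimate is a crude stand-in for a real tokenizer.

```go
package main

import (
	"fmt"
	"sort"
)

// Snippet is one piece of candidate context (a rule, ADR, or prompt).
type Snippet struct {
	Text      string
	Priority  int     // 1 = Critical ... 5 = Nice-to-Know
	Relevance float64 // search score; higher is better
}

// estimateTokens approximates token count at roughly 4 characters per token.
func estimateTokens(s string) int {
	return (len(s) + 3) / 4
}

// Pack selects snippets in priority order until the token budget is spent.
// A snippet that doesn't fit is skipped, so smaller ones later in the list can still be included.
func Pack(snippets []Snippet, budget int) []Snippet {
	sorted := append([]Snippet(nil), snippets...) // copy so the caller's slice is untouched
	sort.SliceStable(sorted, func(i, j int) bool {
		if sorted[i].Priority != sorted[j].Priority {
			return sorted[i].Priority < sorted[j].Priority
		}
		return sorted[i].Relevance > sorted[j].Relevance
	})
	var out []Snippet
	used := 0
	for _, s := range sorted {
		cost := estimateTokens(s.Text)
		if used+cost > budget {
			continue
		}
		out = append(out, s)
		used += cost
	}
	return out
}

func main() {
	candidates := []Snippet{
		{Text: "Always use bcrypt for password hashing with cost factor 12+", Priority: 1, Relevance: 0.8},
		{Text: "Prefer table-driven tests", Priority: 3, Relevance: 0.6},
		{Text: "ADR-001: Why Postgres instead of MongoDB", Priority: 2, Relevance: 0.4},
	}
	for _, s := range Pack(candidates, 30) {
		fmt.Println(s.Priority, s.Text)
	}
}
```

Putting priority ahead of relevance means a Critical security rule survives even a tight budget, while Nice-to-Know items are the first to be dropped.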

I went with Postgres and pgvector for the storage layer. Since I'm already using Postgres, adding vector search capability was obvious. No need for a separate system. The hybrid search combines vector embeddings with SQL full-text search using a 60/40 weighting. Vector search excels at conceptual matches ("How do we handle caching?" finds Redis patterns), whilst keyword search catches exact technical terms ("bcrypt cost factor" returns password hashing rules).
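The post doesn't show the query itself, so here's a minimal in-memory sketch of how a 60/40 blend can work once each retrieval path has produced its own scores. It assumes the 60% applies to vector similarity and the 40% to keyword relevance, that vector scores are cosine similarities in [0, 1] (in pgvector terms, 1 minus the `<=>` cosine distance), and that keyword scores such as Postgres `ts_rank` values are unbounded and therefore scaled by the best keyword score. The `Hit` type and `Blend` function are hypothetical names.

```go
package main

import (
	"fmt"
	"sort"
)

const (
	vectorWeight  = 0.6 // assumption: the "60" applies to vector similarity
	keywordWeight = 0.4
)

// Hit is a search result from one retrieval path.
type Hit struct {
	ID    string
	Score float64
}

// Blend merges vector and keyword hits into one ranked list.
// A document found by only one path still scores, just lower.
func Blend(vector, keyword []Hit) []Hit {
	combined := map[string]float64{}
	for _, h := range vector {
		combined[h.ID] += vectorWeight * h.Score
	}
	maxKW := 0.0
	for _, h := range keyword {
		if h.Score > maxKW {
			maxKW = h.Score
		}
	}
	if maxKW > 0 {
		for _, h := range keyword {
			combined[h.ID] += keywordWeight * h.Score / maxKW // scale keyword scores into [0, 1]
		}
	}
	out := make([]Hit, 0, len(combined))
	for id, s := range combined {
		out = append(out, Hit{ID: id, Score: s})
	}
	sort.Slice(out, func(i, j int) bool {
		if out[i].Score != out[j].Score {
			return out[i].Score > out[j].Score
		}
		return out[i].ID < out[j].ID // deterministic tie-break
	})
	return out
}

func main() {
	vector := []Hit{{ID: "redis-pooling-rule", Score: 0.9}, {ID: "bcrypt-rule", Score: 0.3}}
	keyword := []Hit{{ID: "bcrypt-rule", Score: 2.0}, {ID: "postgres-adr", Score: 1.0}}
	for _, h := range Blend(vector, keyword) {
		fmt.Printf("%-20s %.2f\n", h.ID, h.Score)
	}
}
```

In the example, the bcrypt rule wins despite a weak vector score because it is the strongest exact keyword match, which is the behaviour the hybrid approach is after.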

Getting the balance right was tricky. Sometimes searches missed results you'd expect; other times they returned irrelevant ones. I chose to favour recall over precision: return results that might not be relevant and let the LLM filter them, rather than risk dropping something that matters. Better to have too much than too little.

The big win was local embeddings. OpenAI's embedding API is good, but adding that latency to every search query was too much. I implemented a local embedding service that brought response times down from several hundred milliseconds to 10-20ms. It was surprisingly easy - only about a hundred lines of code - with a fallback to OpenAI if the local service goes down. Since every search query gets embedded, speed matters.

I'd previously built a production-ready Go package for LLM integration, which helped here. The architecture is clean: a single hybrid search queries Rules, ADRs, and Prompts together and returns the top 10 most relevant results regardless of type.

How It Actually Works

The user experience is seamless. Your AI tool queries Cont3xt.dev when it needs context - you don't have to think about it. I've been using it across multiple projects for a few weeks now, and it's transformed my workflow.

I start a new project. The AI immediately knows I use Postgres, knows my preferred folder structure, knows how I write tests. No back-and-forth. No "stop, do it this way instead." I can move faster while knowing my standards are in place.

The team coordination features were something I added after realising how often developers unknowingly work on the same code. You can see who's working on what in real-time. The AI can check if someone else is already refactoring the auth module before suggesting changes.
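The post doesn't describe how work in progress is tracked, so this is only a sketch of the idea: developers register the area they're working on, and before suggesting changes an AI tool asks whether anyone else holds an overlapping claim. It's in-memory and single-process; sharing it across a team in real time would need a server and persistence. `Registry`, `Claim`, and the path-prefix matching are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
	"time"
)

// Claim records that a developer is actively working under a path prefix.
type Claim struct {
	Developer  string
	PathPrefix string
	Since      time.Time
}

// Registry is an in-memory, concurrency-safe record of active work.
type Registry struct {
	mu     sync.Mutex
	claims []Claim
}

// Start records that dev is working under prefix.
func (r *Registry) Start(dev, prefix string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.claims = append(r.claims, Claim{Developer: dev, PathPrefix: prefix, Since: time.Now()})
}

// Finish removes dev's claim on prefix.
func (r *Registry) Finish(dev, prefix string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	kept := r.claims[:0]
	for _, c := range r.claims {
		if c.Developer != dev || c.PathPrefix != prefix {
			kept = append(kept, c)
		}
	}
	r.claims = kept
}

// Conflicts returns claims by other developers that overlap path.
func (r *Registry) Conflicts(dev, path string) []Claim {
	r.mu.Lock()
	defer r.mu.Unlock()
	var out []Claim
	for _, c := range r.claims {
		if c.Developer != dev && overlaps(path, c.PathPrefix) {
			out = append(out, c)
		}
	}
	return out
}

// overlaps reports whether one path contains the other, matching whole segments
// so "internal/auth" does not overlap "internal/authz".
func overlaps(a, b string) bool {
	a, b = strings.TrimSuffix(a, "/"), strings.TrimSuffix(b, "/")
	return a == b || strings.HasPrefix(a, b+"/") || strings.HasPrefix(b, a+"/")
}

func main() {
	var reg Registry
	reg.Start("alice", "internal/auth")
	for _, c := range reg.Conflicts("bob", "internal/auth/session.go") {
		fmt.Printf("%s has been working on %s since %s\n", c.Developer, c.PathPrefix, c.Since.Format(time.Kitchen))
	}
}
```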

What really surprised me was how much time this saved on answering questions. Those "Why did we make this decision?" questions that senior developers field constantly? The AI handles them now by surfacing the relevant ADR.

Why This Matters for Teams

The individual productivity gains are great, but the team benefits are where this gets interesting.

Onboarding time collapses. New developers have immediate access to all team conventions through their AI tools. They write code that matches team patterns from day one. The ramp-up period drops from weeks to days.

PR reviews become faster. When AI tools follow team standards automatically, fewer PRs get rejected for style or pattern violations. Code reviews focus on logic and design instead of pointing out the same mistakes.

Knowledge preservation happens automatically. With GitHub integration, architectural decisions get extracted from PR discussions and suggested as ADRs. Nothing gets lost in Slack threads anymore.

Standards stay consistent. Update a rule once, every developer's AI tools immediately use the new standard. No more chasing people down to update their local config files. Whether they're using different coding LLMs or the same one, everyone gets the same standards.

The metrics from early teams using this have been solid. Context failures dropped significantly. Time spent answering repeated questions fell by hours per week. And new developers started contributing meaningful code much faster.

Available Now

Cont3xt.dev is live at cont3xt.dev. There's a free tier for individual developers, and team plans start at 5 seats. The 14-day trial gives you full access to team features with no credit card required.

The MCP integration works with Cursor, Claude Code, GitHub Copilot, VS Code, JetBrains AI, and any MCP-compatible tool. Connect once, work everywhere. No manual configs to maintain.

I built this because I needed it. The manual workarounds weren't cutting it, and the team scaling problem was clear. If you're dealing with AI tools that don't know your team's standards, or you're spending hours in PR reviews catching the same mistakes, this might help.

The Shift

We're moving from manual context management to intelligent context delivery. From every developer maintaining their own config files to teams sharing a single source of truth. From AI tools that confidently suggest anti-patterns to AI tools that understand your team's way of doing things.

The context problem has been the limiting factor in AI coding tools. Teams waste time debating which LLM is best for programming when the real issue is that none of them have proper context. With intelligent context management, it doesn't matter if you're using Claude, GPT-4, or OpenAI's latest release: they all become significantly more useful when they understand your team's standards.

They stop being "fast typists with no context" and start being "team members who know how we work."

Give it a try. I'd love to hear what you think.